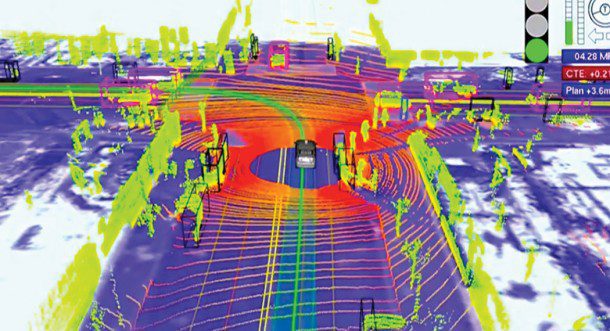

A point cloud image of a vehicle approaching an intersection illustrates the complexity of data collected by Velodyne's HDL-64E LiDAR device.

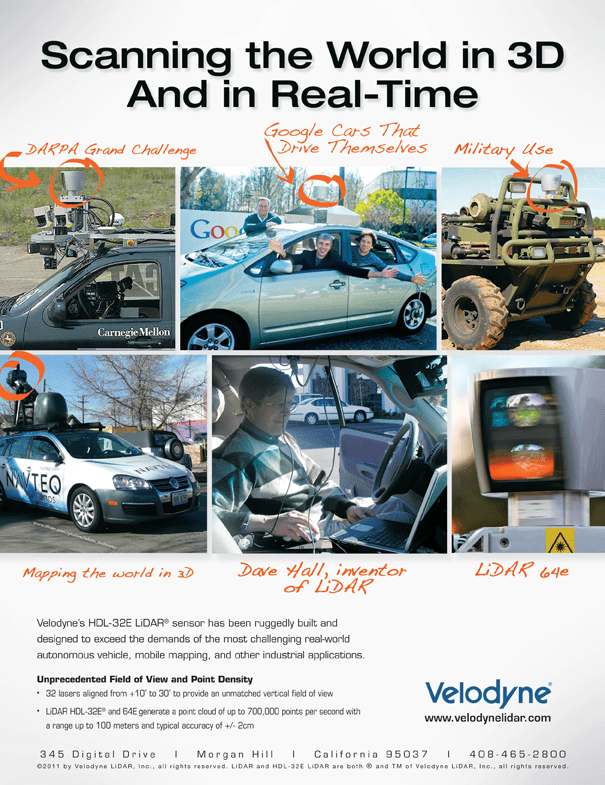

It mounts on top of a vehicle, has 64 lasers that generate millions of data points per second, and is a potential game changer in the world of autonomous vehicle technology.

The HDL-64E LiDAR sensor from Morgan Hill, Calif.-based Velodyne is designed for obstacle detection and navigation of autonomous ground vehicles and marine vessels. Its durability, 360-degree field of view and high data-collection rate make this sensor ideal for the most demanding real-world perception applications, as well as for effective 3-D mobile data collection and mapping applications.
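For readers curious how a spinning laser sensor's measurements become the kind of point cloud pictured above, here is a minimal sketch. It assumes each laser return arrives as a range plus two angles and converts it to x, y, z coordinates. The function names, the angle conventions and the input layout are illustrative assumptions; they are not Velodyne's data format or software.

```python
import math


def return_to_point(range_m, azimuth_deg, elevation_deg):
    """Convert one laser return to (x, y, z) in the sensor's own frame.

    Assumed conventions (hypothetical, not taken from Velodyne documentation):
    azimuth is measured counterclockwise from the x axis in the horizontal
    plane, and elevation is measured upward from that plane.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = range_m * math.cos(el)  # distance projected onto the ground plane
    x = horizontal * math.cos(az)
    y = horizontal * math.sin(az)
    z = range_m * math.sin(el)
    return (x, y, z)


def returns_to_cloud(returns):
    """Convert an iterable of (range_m, azimuth_deg, elevation_deg) tuples."""
    return [return_to_point(r, az, el) for r, az, el in returns]
```

Calling `returns_to_cloud` on the returns from one full rotation would yield a single 360-degree snapshot of the surroundings as a list of 3-D points.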

Velodyne's LiDAR system is the brainchild of visionary entrepreneur and company founder David Hall, who also holds numerous patents in the field of digital audio amplification. He built his first amplifier at the age of four.

Entering the Race

Velodyne’s expertise with laser distance measurement began with its participation in the 2005 Grand Challenge sponsored by the Defense Advanced Research Projects Agency (DARPA). The event was a race for autonomous vehicles across the Mojave Desert, and DARPA’s goal was to stimulate autonomous vehicle technology development for both military and commercial applications.

Hall and his brother Bruce entered the competition as Team DAD (Digital Audio Drive). The team initially developed its technology for visualizing the environment with a dual video camera approach, and later developed a laser-based system that became the foundation for Velodyne’s current LiDAR products.

The first Velodyne LiDAR scanner was about 30 inches in diameter and weighed close to 100 pounds. By focusing on commercializing the LiDAR scanner, Velodyne dramatically reduced the sensor’s size and weight while improving its performance.

To date, one of the most newsworthy efforts involving the HDL-64E LiDAR sensor has been its use as an integral component of Google's experimental fleet of self-driving Toyota Priuses, which have reportedly logged more than 190,000 miles. Stanford University professor Sebastian Thrun, who guides the project along with Google engineer Chris Urmson, describes Velodyne's LiDAR as the heart of the system.

The Google vehicles also carry other sensors, including four radars, mounted on the front and rear bumpers, that allow the car to see far enough to deal with fast traffic on freeways; a camera, positioned near the rear-view mirror, that detects traffic lights; and a Global Positioning System, an inertial measurement unit and a wheel encoder that work together to determine the vehicle’s location and keep track of its movements.
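To give a feel for how a wheel encoder and a heading reading can keep track of a vehicle's movements, here is a minimal dead-reckoning sketch. It is an illustration under stated assumptions, not a description of Google's software: the function name, the parameters and the flat-ground, straight-line motion model are all hypothetical.

```python
import math


def advance_pose(x_m, y_m, heading_deg, ticks, ticks_per_rev, wheel_circumference_m):
    """Estimate a new (x, y) position from wheel-encoder ticks and a heading.

    Assumptions (illustrative only): the heading comes from some other sensor,
    such as an inertial measurement unit, and stays constant over the step;
    0 degrees points along the x axis; the wheel does not slip.
    """
    # Encoder ticks -> wheel revolutions -> distance rolled
    distance_m = ticks / ticks_per_rev * wheel_circumference_m
    heading = math.radians(heading_deg)
    return (x_m + distance_m * math.cos(heading),
            y_m + distance_m * math.sin(heading))
```

Estimates like this drift as small errors accumulate, which is one reason a vehicle would combine them with an absolute position fix such as GPS.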

According to The New York Times, Google touts safety as one of the top reasons to bring autonomous vehicles to the road. With instant reaction time and 360-degree awareness, engineers believe these autonomous autos have the potential to significantly decrease highway-related deaths and accidents. Unlike human drivers, LiDAR doesn't get tired, become distracted or fall asleep at the wheel.

You may one day get the opportunity to read the paper, eat breakfast, pay a few bills and e-mail your college roommate during your morning commute, all with LiDAR-assisted driving.

For more information, visit Velodyne at www.velodynelidar.com.