Technology innovations are minimizing the processing times and disk space required to store vast volumes of LiDAR data.

By Mark Kozak and Verne LaClair, PAR Government Systems (www.pargovernment.com), Rome, N.Y.

Big data is a term applied to datasets whose size is beyond the ability of commonly used software tools to capture, manage and process the data within a tolerable elapsed time. Light detection and ranging (LiDAR) data fit this description.

LiDAR technology provides the geospatial community with massive amounts of data for use in a variety of applications. As we continue to collect, process and store this seemingly unending supply of information, the key challenge is maintaining and delivering the data efficiently.

Most LiDAR users agree that the challenge is to decrease data transmission, storage and processing requirements. Thus, an ideal solution would include the following functions for extremely large datasets:

• Rapid loading of compressed data

• Ability to merge multiple collections

• Retrieve by collection, date or footprint

• Image cube fusion support

• Support processing of compressed data

• Remain scalable based on collection resolution and precision

• Feature independent: no assumptions made on nature of data

• Tunable: numerically adjust to same parameters as data collection

• Dramatic compression increases with minimal quantization loss

• Yield high lossless compression ratio

Individuals in the field, whether responding to natural disasters, protecting national borders or conducting missions abroad, can benefit from detailed LiDAR data. Currently, these large data files must be shipped on storage devices, or specific information of interest must be extracted and processed to a size and format supported by current bandwidth.

Data Compression Bridges the Gap

General-purpose data compression formats such as .zip and .rar have significantly pared down the amount of space necessary to house data libraries. However, users still need to organize, manage and deliver volumes of compressed point data. Such tasks can be daunting. Storage requirements can rapidly grow to tens or hundreds of terabytes when dealing with multiple LiDAR collects over time or when working with dense point spacing over a large collection extent. In an increasingly digital world, users expect instant access to ever-increasing data coverage, yet the sheer volume of data delays the delivery of crucial information.

Effective LiDAR data compression requires an understanding of data structure and file format semantics. Much like the movie industry has created specialized MPEG compression and tools to squeeze out every last possible bit of data reduction while preserving high-definition (HD) video quality, LiDAR data compression algorithms seek to maximize compression ratios while retaining data integrity and point accuracy.

Exploiting predictable patterns and characteristics of point attributes (e.g., Global Positioning System time; x, y and z coordinates; or classification codes) can yield better results than just applying an all-purpose entropy-encoding or dictionary look-up method to a given dataset. For example, having a priori knowledge that a stream of classification codes may only contain values ranging from 0 to 12 enables users to create a much more efficient compression and/or indexing algorithm.
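As a rough illustration of the classification-code example, the sketch below packs two codes into each byte, on the assumption that every code fits in 4 bits (values 0 to 15, which covers the 0 to 12 range mentioned above). The function names `pack_codes` and `unpack_codes` are hypothetical and are not taken from any particular LiDAR toolkit; a production codec would likely go further, for example by entropy-coding runs of identical codes.

```python
def pack_codes(codes):
    """Pack classification codes (each 0..15) two per byte.

    Assumption: every code fits in 4 bits, so a stream that normally
    uses one byte per point needs only half a byte per point.
    """
    if any(not 0 <= c <= 15 for c in codes):
        raise ValueError("codes must fit in 4 bits")
    # Pad with a zero so the list has an even number of entries.
    padded = list(codes) + [0] * (len(codes) % 2)
    return bytes((padded[i] << 4) | padded[i + 1]
                 for i in range(0, len(padded), 2))


def unpack_codes(packed, count):
    """Recover the first `count` codes from a packed byte string."""
    out = []
    for b in packed:
        out.append(b >> 4)      # high nibble holds the first code
        out.append(b & 0x0F)    # low nibble holds the second code
    return out[:count]


if __name__ == "__main__":
    codes = [2, 2, 2, 5, 5, 12, 0, 1, 9]
    packed = pack_codes(codes)
    print(len(codes), "codes stored in", len(packed), "bytes")
    print(unpack_codes(packed, len(codes)) == codes)
```

The point count has to be stored alongside the packed bytes, because an odd-length stream carries one padding nibble at the end.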

Optimizing Accuracy and Precision

A cost-effective way to reduce data size is simply saving data with appropriate precision. For example, consider precision as a function of spot size, which is largely a function of sensor distance from target and sensor beam divergence. Divergence typically ranges from 0.2 to 0.8 milliradians. In the case of airborne collections, target distance is determined by the laser's flying elevation and scan angle.
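The relationship between distance, divergence and spot size can be sketched in a few lines. This is a simplified geometric model, not the sensor vendors' formula: it assumes flat terrain, treats the divergence as a full cone angle, and ignores the beam diameter at the exit aperture. The function names and the sample flying heights are illustrative only.

```python
import math


def slant_range(flying_height_m, scan_angle_deg):
    """Distance from sensor to target, assuming flat terrain.

    scan_angle_deg is measured from nadir (0 = straight down).
    """
    return flying_height_m / math.cos(math.radians(scan_angle_deg))


def spot_diameter(range_m, divergence_mrad):
    """Approximate laser footprint diameter on the target, in meters.

    Assumption: divergence is the full cone angle and the beam
    diameter at the aperture is negligible.
    """
    return 2.0 * range_m * math.tan(divergence_mrad * 1e-3 / 2.0)


if __name__ == "__main__":
    for height in (500.0, 1000.0, 2000.0):
        for divergence in (0.2, 0.8):
            rng = slant_range(height, scan_angle_deg=15.0)
            print(f"height {height:6.0f} m, divergence {divergence} mrad: "
                  f"spot {spot_diameter(rng, divergence):.3f} m")
```

For small angles the footprint is very nearly range multiplied by divergence in radians, so a 0.8-milliradian beam at 1,000 meters illuminates a spot of roughly 0.8 meters.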

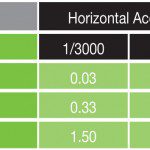

Table 1 illustrates typical minimum and maximum spot size in meters. The spot size determines the precision with which the horizontal location of a pulse return can be measured. Users often will insist on storing data in the highest precision available in a given format. It's easy to place x and y coordinates in a LiDAR Data Exchange (LAS) file with enough digits to represent 1 centimeter.

This approach is pointless, however, if the data are collected with a 50-centimeter spot size. Imagine claiming to measure a distance in feet while using a vehicle's odometer: the odometer's unit of measure simply doesn't support the desired precision. Therefore, using an appropriate unit of measure to record location potentially reduces data storage without real data loss.
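To make the storage argument concrete, the sketch below counts how many bits are needed to address every step across a coordinate extent at a given storage step. LAS stores coordinates as 32-bit integers with a scale factor and offset, so a coarser step does not shrink the raw field, but it leaves fewer significant bits for a compressor to encode. The 10-kilometer extent, the 0.25-meter step and the helper names are assumptions chosen for illustration.

```python
def bits_per_coordinate(extent_m, step_m):
    """Bits needed to index every step across an extent."""
    steps = round(extent_m / step_m)
    return max(1, steps.bit_length())


def to_scaled_int(value_m, offset_m, step_m):
    """Store a coordinate as an integer count of steps from an offset."""
    return round((value_m - offset_m) / step_m)


def from_scaled_int(stored, offset_m, step_m):
    """Recover the coordinate represented by a stored integer."""
    return stored * step_m + offset_m


if __name__ == "__main__":
    extent = 10_000.0  # hypothetical 10 km collection extent
    for step in (0.01, 0.25):
        print(f"step {step} m needs {bits_per_coordinate(extent, step)} "
              f"bits per coordinate")

    easting = 500_123.37
    stored = to_scaled_int(easting, 500_000.0, 0.25)
    print(stored, from_scaled_int(stored, 500_000.0, 0.25))
```

Rounding to the nearest step moves a coordinate by at most half a step, which is well inside a 50-centimeter spot when the step is 0.25 meters.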

Horizontal accuracy also is a function of sensor distance to the target. Advertised accuracy typically ranges from 1/3,000th to 1/5,500th the distance. Table 2 illustrates typical accuracies that can be expected. Although accuracy is independent of precision, it should be considered when selecting the optimal precision with which to store data.
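The advertised ratios translate directly into an expected accuracy band for a given sensor-to-target distance. The short sketch below simply applies the 1/3,000 and 1/5,500 ratios quoted above; the function name and the sample distances are illustrative, and the results are not taken from Table 2.

```python
def horizontal_accuracy_band(range_m, best_ratio=5500.0, worst_ratio=3000.0):
    """Return (best, worst) expected horizontal accuracy in meters.

    The ratios are the advertised 1/5,500 and 1/3,000 of the
    sensor-to-target distance quoted in the text.
    """
    return range_m / best_ratio, range_m / worst_ratio


if __name__ == "__main__":
    for distance in (500.0, 1000.0, 2000.0):
        best, worst = horizontal_accuracy_band(distance)
        print(f"{distance:6.0f} m: {best:.3f} m to {worst:.3f} m")
```

At 1,000 meters this gives roughly 0.18 to 0.33 meters, which suggests that storing horizontal positions to the centimeter adds digits the measurement cannot support.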

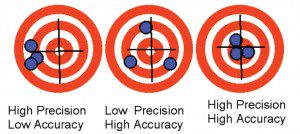

Figure 1 illustrates precision and accuracy in the context of a reported location of a LiDAR pulse. In all three cases, the location of the three targets will be reported as the center of the laser spot. Smaller spots yield higher precision. Although systematic (bias) errors can be compensated for during post-processing, random errors introduced by moving parts and other dynamic aspects of the system cannot.

Understanding Lossy vs. Lossless

There are three levels of lossiness for LiDAR compression. The first is numerically lossless. This means the decompressed data will be byte for byte identical to the original. Although this is achievable, it dramatically limits compression rates.
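A numerically lossless round trip is easy to verify: compress, decompress and compare bytes. The sketch below uses Python's general-purpose `zlib` module purely as a stand-in; it is not a LiDAR-specific codec, and the synthetic integer point records are invented for the demonstration.

```python
import struct
import zlib


def compress_points(points):
    """Serialize (x, y, z) integer triples and compress them.

    Returns the raw bytes and the zlib-compressed bytes.
    """
    raw = b"".join(struct.pack("<iii", *p) for p in points)
    return raw, zlib.compress(raw, 9)


def is_lossless(raw, compressed):
    """True if decompression reproduces the original byte for byte."""
    return zlib.decompress(compressed) == raw


if __name__ == "__main__":
    # Synthetic points, stored as scaled integers.
    points = [(i, i * 2, 100 + i % 3) for i in range(1000)]
    raw, compressed = compress_points(points)
    print(len(raw), "bytes raw,", len(compressed), "bytes compressed")
    print("lossless:", is_lossless(raw, compressed))
```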

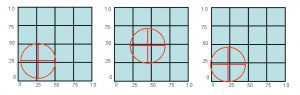

The second level is what is commonly termed visually lossless. LiDAR data typically are referenced using the Universal Transverse Mercator (UTM) projection, which is a grid. Any grid system will suffer quantization error. Figure 2 illustrates the effects of quantization error on a LiDAR pulse measurement. In this example, a spot size of 0.45 meters is used in a 0.25-meter quantization cell size. The top grid shows a spot that's just inside the cell that would be recorded as offset 0.375 meters in both x and y from the origin.

Although the actual location of the spot in the middle grid has moved 0.35 meters northeast, it will still be reported as a 0.375 offset because it's still in the same cell. The spot in the bottom example has moved a relatively small distance, but it will be reported as having a 0.125 offset for x and y because it has crossed into another cell. The maximum quantization shift along each axis is half the cell size. If the cell size used is no more than half the spot accuracy, the reliability of the data remains unchanged.
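The cell behavior described above can be reproduced with a few lines of code. This sketch assumes each position is reported as the center of the grid cell it falls in, which matches the 0.375 and 0.125 offsets in the example; the input positions are invented to mimic the figure rather than read from it.

```python
import math


def quantize_to_cell_center(value_m, cell_m):
    """Report a position as the center of the grid cell containing it."""
    index = math.floor(value_m / cell_m)
    return (index + 0.5) * cell_m


if __name__ == "__main__":
    cell = 0.25
    # Two positions inside the 0.25-0.50 m cell and one just across
    # the boundary in the 0.00-0.25 m cell.
    for actual in (0.26, 0.49, 0.24):
        reported = quantize_to_cell_center(actual, cell)
        shift = abs(reported - actual)
        print(f"actual {actual:.2f} m -> reported {reported:.3f} m "
              f"(shift {shift:.3f} m)")
```

The shift along an axis never exceeds half the cell size (0.125 meters here), yet two positions only 0.02 meters apart can be reported 0.25 meters apart when they straddle a cell boundary.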

The third level employs lossy techniques in much the same fashion as raster imagery. Just as some degree of lossiness is acceptable in JPEG image compression to transmit the image over a network, further reducing LiDAR point cloud precision may prove appropriate in some cases to facilitate rapid dissemination. Leveraging the special characteristics of the point-attribute field types defined in the LAS file can also help to reduce the size of a compressed LAS file by up to an additional 40 percent.

Point Cloud Seeding Shrinks Storage Needs

Leveraging more than a decade of LiDAR experience, PAR Government Systems has been analyzing LiDAR data structures to develop an algorithm that yields an 80 percent reduction in storage requirements for LAS files. Based on a light-sensitive data structure with built-in compression and decompression, the company's Silver*Chloride Aggregate Granular Cloud Library (AgCl) toolkit is intended to seed LiDAR point clouds.

Testing has been performed on numerous datasets, including samples provided by the BuckEye program, high-density terrestrial LiDAR data from the U.S. Geological Survey and digitized full-waveform collections from the National Oceanic and Atmospheric Administration. Actual results have been comparable to those attained with open-source software solutions, such as LASzip.

In addition to compressing data, a database indexing structure has been implemented to support query and retrieval of a variety of LiDAR data subsets based on geographic locations or user-desired positional (xyz) parameters, as well as temporal delimiters. The database feature also has been extended to include query syntax specific to user attributes of interest.
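The kind of spatial and temporal query described above can be illustrated with a simple tiled index. This is a generic sketch and not the Silver*Chloride design or API: the `TileIndex` class, its 100-meter tile size and its method names are all hypothetical, and a real system would keep each tile compressed on disk rather than in memory.

```python
from collections import defaultdict


class TileIndex:
    """Hypothetical in-memory index bucketing points into square tiles."""

    def __init__(self, tile_m=100.0):
        self.tile_m = tile_m
        self.tiles = defaultdict(list)

    def _key(self, x, y):
        # Tile coordinates of the tile containing (x, y).
        return (int(x // self.tile_m), int(y // self.tile_m))

    def add(self, x, y, z, gps_time):
        self.tiles[self._key(x, y)].append((x, y, z, gps_time))

    def query(self, xmin, ymin, xmax, ymax,
              t0=float("-inf"), t1=float("inf")):
        """Return points inside the footprint and the time window."""
        kx0, ky0 = self._key(xmin, ymin)
        kx1, ky1 = self._key(xmax, ymax)
        hits = []
        # Visit only the tiles that overlap the requested footprint.
        for kx in range(kx0, kx1 + 1):
            for ky in range(ky0, ky1 + 1):
                for p in self.tiles.get((kx, ky), ()):
                    if (xmin <= p[0] <= xmax and ymin <= p[1] <= ymax
                            and t0 <= p[3] <= t1):
                        hits.append(p)
        return hits


if __name__ == "__main__":
    index = TileIndex()
    index.add(10.0, 10.0, 5.0, gps_time=100.0)
    index.add(150.0, 20.0, 6.0, gps_time=200.0)
    index.add(950.0, 950.0, 7.0, gps_time=300.0)
    print(index.query(0.0, 0.0, 200.0, 100.0))
    print(index.query(0.0, 0.0, 200.0, 100.0, t0=150.0))
```

Because only the tiles overlapping the footprint are visited, the cost of a query depends on the size of the area of interest rather than on the size of the whole repository.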

A compressed database storage structure lends itself well as an aggregator of all a user's LiDAR collections into a single repository. This is an important factor for compression performance, because initial testing has revealed that compression increases with point density.

The Silver*Chloride solution provides an application interface to perform queries that identify the clouds of interest and then export those points with selected attributes and a specified quantization, yielding the desired compression. This database structure supports query and retrieval of a variety of LiDAR data subsets based on geographic locations or user-desired positional (xyz) parameters, including standard Boolean delimiters. The database feature set can be extended to include query syntax specific to user attributes of interest.

New solutions such as Silver*Chloride provide users with the ability to quickly and effectively retrieve large datasets to perform analysis on only a relevant area of interest without the need to manipulate unnecessary information. With continued deployment of data collection platforms producing waves of data, as well as the desire for instant access to pertinent pieces of data, how users manage and manipulate big data will become crucial to the usefulness of these systems.