By Catherine Burton

Summer is a time to relax and enjoy the warm weather or wade into the water on the shores of a welcoming lake. But just beneath the peace and tranquility lies data: measurements of increasingly hot weather, drought, mudslides, consumer spending and traffic.

Businesses and public agencies are collecting data points around the clock from an ever-increasing number of connected and remote sensors all over the planet. The Landsat 7 and 8 satellites alone collect 1,200 images per day at approximately 1GB per image, and the Copernicus Program's Sentinel fleet soon will produce 10TB per day of free and open data.
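
The volumes quoted above can be sanity-checked with a few lines of arithmetic. This is a minimal Python sketch that assumes "approximately 1GB per image" means exactly 1 GB and uses decimal units (1 TB = 1,000 GB). The constant and function names are illustrative and do not come from any library.

```python
# Back-of-envelope Earth-observation data volumes from the figures in the text.
# Assumptions: ~1 GB per Landsat image is treated as exactly 1 GB; decimal units.
LANDSAT_IMAGES_PER_DAY = 1_200
GB_PER_LANDSAT_IMAGE = 1.0
SENTINEL_TB_PER_DAY = 10.0
GB_PER_TB = 1_000


def tb_per_day(images_per_day: int, gb_per_image: float) -> float:
    """Daily volume in terabytes for a sensor producing fixed-size images."""
    return images_per_day * gb_per_image / GB_PER_TB


def tb_per_year(daily_tb: float, days: int = 365) -> float:
    """Scale a daily volume to a (non-leap) year."""
    return daily_tb * days


if __name__ == "__main__":
    landsat = tb_per_day(LANDSAT_IMAGES_PER_DAY, GB_PER_LANDSAT_IMAGE)
    print(f"Landsat 7 and 8: {landsat:.1f} TB/day, {tb_per_year(landsat):,.0f} TB/year")
    print(f"Sentinel fleet: {SENTINEL_TB_PER_DAY:.1f} TB/day, "
          f"{tb_per_year(SENTINEL_TB_PER_DAY):,.0f} TB/year")
```

Under these assumptions, Landsat 7 and 8 produce about 1.2 TB a day, so the Sentinel fleet's 10TB a day would be more than eight times that volume.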

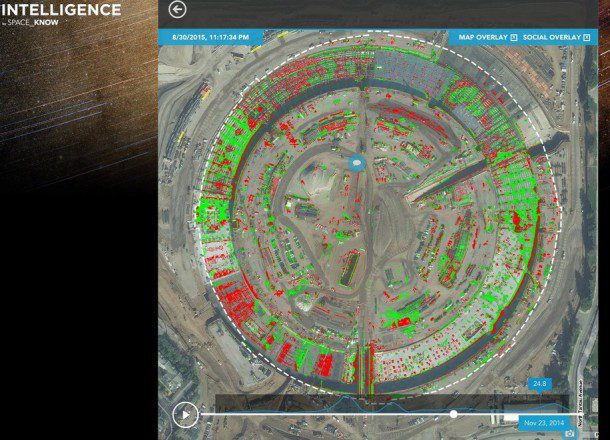

Spaceknow Analytics uses change detection to map construction at the Apple Campus. (Credit: Spaceknow & DigitalGlobe)

Remotely sensed imagery, combined with location data collected on the ground or via connected devices, greatly multiplies the ways data can be analyzed spatially.

"A few years back, 20 million geospatial records were considered a lot of data," says Ragi Burhum, CEO of AmigoCloud. Tracking only 10 sensors (e.g., in a car or a smartwatch) at one-second intervals easily generates more than 315 million records a year.
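
The 315 million figure follows directly from the number of seconds in a year. Here is a minimal sketch that assumes a non-leap year and one record per sensor per reading. The function name is illustrative.

```python
# How quickly continuous location tracking adds up.
SECONDS_PER_YEAR = 365 * 24 * 60 * 60  # 31,536,000 s in a non-leap year


def records_per_year(sensors: int, interval_s: float = 1.0) -> int:
    """Records produced when each sensor reports once every `interval_s` seconds."""
    return round(sensors * SECONDS_PER_YEAR / interval_s)


if __name__ == "__main__":
    total = records_per_year(10)
    print(f"{total:,} records/year")  # 315,360,000 records/year
    print(f"{total / 20_000_000:.1f}x the 20 million once considered a lot")  # 15.8x
```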

To work with Earth observation (EO) data at Big Data scales, teams outside GIS and remote sensing are increasingly rising to the challenge of understanding data sources, data management and processing.

"GIS and remote-sensing skills don't often correlate with skills for building scalable systems," notes Robin Kraft, product manager at Planet Labs.

Those wanting to take advantage of such data should prepare to learn new skills or hire a different type of person than previously needed. Deriving value from large, complex datasets may depend on balancing deep knowledge of man/land relationships with savvy, practical experience in best-in-class tools.

"There is a clear trend in students in [remote sensing] and geospatial graduate programs graduating with much stronger quantitative skills and programming capabilities than ever before," says Thomas Loveland, senior scientist at the U.S. Geological Survey (USGS).

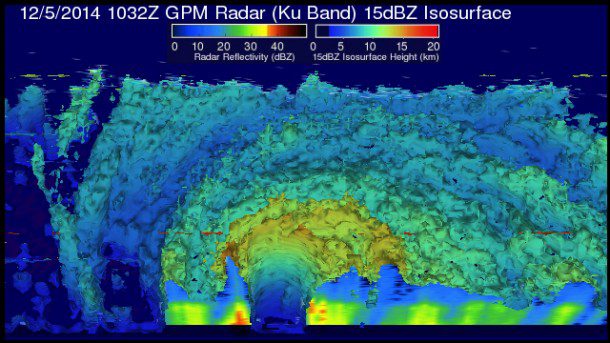

As an example of Big Data that need to be accessible as quickly as possible, NASA/JAXA's GPM/GMI satellite sensor monitored Typhoon Hagupit in 2014. (Credit: NASA/JAXA/SSAI, Hal Pierce)

Defense and other government agencies have been generating information from remotely sensed Big Data for many years. As the Earth-observation market pivots away from defense and into the private sector, viable business models come into question. Defining market demand will be critical to industry growth.

"There are three axes of improvement that will help grow the market beyond where it currently exists: 1) increases in available imagery, 2) improvements in automated processing and 3) improvements in analytics development," notes Alex Diamond, data and analytics lead at RS Metrics.

Data Sources

Remotely sensed data platforms include satellites, fixed-wing aircraft and ground platforms that can carry optical (multispectral and hyperspectral), radar (SAR) or laser (LiDAR) sensors. Legacy and startup companies alike face challenges in transmitting data from sensors, exploiting ground-station networks, delivering data on time and providing customer access. The value of remotely sensed imagery is directly related to its timestamp, and, in some sectors, there's a strong business case for getting imagery to those who need it most as quickly as possible.

Agencies such as the European Space Agency, USGS and NASA support open-data initiatives, giving those in science, resource-management and conservation fields the ability to use the data needed to solve a problem vs. the data they can afford, notes Loveland.

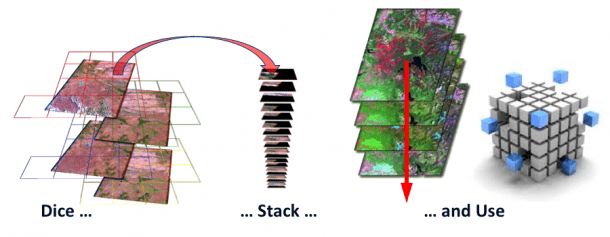

The Australian Geoscience Data Cube provides a gridded data-analysis environment that integrates decades of satellite and related data.

In 2015, Amazon Web Services (AWS) began serving Landsat 8 data through an API, a transformational moment in access to public satellite imagery. Within the first year, the data were requested more than 1 billion times globally. Serving Landsat data on AWS is important for two reasons: 1) downloading scenes seamlessly via API is a low-cost, high-return workflow, and 2) it exposes satellite imagery to developers who may not previously have been aware of the dataset.

Open data is a tremendous step forward in distributing satellite imagery. For startups entering high-yield commercial growth markets such as tracking economic patterns, however, multispectral submeter-resolution imagery is still scarce.

"Although there has been much promise, the increase in available very-high-resolution imagery has mostly proceeded on a linear basis since the 2007 launch of DigitalGlobe's WorldView-1," says Diamond.

New imagery providers are driving down the cost per pixel, but they've had mixed success building archives and lack the accuracy and resolution of established players. There's an ever-increasing volume of imagery available for certain purposes, but perhaps not yet the promised proliferation of large-area-coverage, sub-meter, multispectral data needed to fully penetrate the commercial market.

Data Management

Remotely sensed imagery is a complex data source because it is nonlinear, multi-scale, heterogeneous and high-dimensional. Software designers must incorporate methods for orienting image and range sensors, reconstructing objects, processing imagery and point clouds both separately and together, and modeling data in 3D, at multiple scales and in n dimensions.

"Shapefiles or geodatabases were never designed for that magnitude of data, and most organizations that try to use traditional geospatial software quickly find that out," notes Burhum.

Although the standard tools for analyzing geospatial Big Data aren't up to the task, risk-taking is being rewarded.

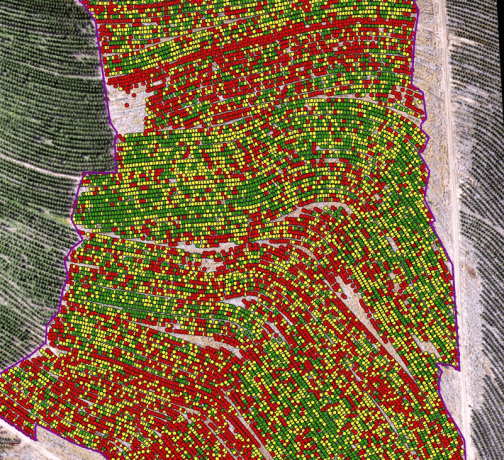

Intelescope Solutions assesses the inventories of commercial timber farms using machine-learning algorithms and DigitalGlobe satellite imagery.

"Don't be afraid to misuse technology," adds Kraft. "For example, converting GeoTIFFs to web-map tiles living on [an AWS] can be a convenient and simple way to parallelize subsequent operations in a workflow. And using low-cost open-source software is a must. The quality is excellent, and it won't blow up your budget when you need to run it on 1,000 cloud servers for a few hours or weeks or months."
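
The tiling idea can be illustrated in miniature. Once a raster is cut into independent tiles, each tile can go to a different worker. The sketch below is a hypothetical, in-memory stand-in: it uses plain Python lists instead of GeoTIFFs or web-map tiles in cloud storage, and threads instead of cloud servers. The function names are illustrative.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List, Tuple

Raster = List[List[float]]
TileKey = Tuple[int, int]  # (tile_row, tile_col)


def split_into_tiles(raster: Raster, tile_size: int) -> Dict[TileKey, Raster]:
    """Cut a 2D raster into tile_size x tile_size blocks; edge tiles may be smaller."""
    tiles: Dict[TileKey, Raster] = {}
    for r0 in range(0, len(raster), tile_size):
        for c0 in range(0, len(raster[0]), tile_size):
            block = [row[c0:c0 + tile_size] for row in raster[r0:r0 + tile_size]]
            tiles[(r0 // tile_size, c0 // tile_size)] = block
    return tiles


def tile_mean(tile: Raster) -> float:
    """Example per-tile operation: the mean pixel value."""
    values = [v for row in tile for v in row]
    return sum(values) / len(values)


def process_tiles(tiles: Dict[TileKey, Raster],
                  func: Callable[[Raster], float],
                  workers: int = 4) -> Dict[TileKey, float]:
    """Apply `func` to every tile in parallel; tiles are independent, so no coordination is needed."""
    keys = list(tiles)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = list(pool.map(func, (tiles[k] for k in keys)))
    return dict(zip(keys, results))


if __name__ == "__main__":
    raster = [[float(r * 4 + c) for c in range(4)] for r in range(4)]
    print(process_tiles(split_into_tiles(raster, 2), tile_mean))
    # {(0, 0): 2.5, (0, 1): 4.5, (1, 0): 10.5, (1, 1): 12.5}
```

Threads keep the example simple. For CPU-heavy work in Python, a process pool or a cluster of cloud machines would be the natural substitute, and the per-tile function stays the same.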

Cloud computing may address the data-management problem, but data processing and analysis will require new, inventive software that accommodates the unique characteristics of multiple sensors and data sources.

Data Processing

Desktop image-processing professionals have long used algorithmic techniques, from convolution filtering to machine-learning methods such as nearest-neighbor classification, to normalize remotely sensed imagery and extract value.
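
Convolution filtering slides a small kernel over the image and replaces each pixel with a weighted sum of its neighbors. Below is a minimal pure-Python sketch of the technique. The clamped edge handling and the two example kernels were chosen for illustration. Production work would use an optimized library such as SciPy's `ndimage` module.

```python
from typing import List

Raster = List[List[float]]
Kernel = List[List[float]]

# Low-pass (smoothing) kernel: each output pixel is the mean of its 3x3 neighborhood.
MEAN_3X3: Kernel = [[1 / 9] * 3 for _ in range(3)]
# Laplacian kernel: responds to edges and is zero over uniform areas.
LAPLACIAN_3X3: Kernel = [[0, 1, 0], [1, -4, 1], [0, 1, 0]]


def convolve3x3(raster: Raster, kernel: Kernel) -> Raster:
    """Apply a 3x3 kernel to every pixel.

    Edge pixels reuse the nearest in-bounds neighbor (clamping). The kernel is not
    flipped, which matches true convolution for symmetric kernels like those above.
    """
    rows, cols = len(raster), len(raster[0])
    out = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            acc = 0.0
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    rr = min(max(r + dr, 0), rows - 1)
                    cc = min(max(c + dc, 0), cols - 1)
                    acc += kernel[dr + 1][dc + 1] * raster[rr][cc]
            out[r][c] = acc
    return out
```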

"Remember: rasters are just arrays of numbers [with] metadata, and raster and vector data can be expressed as easily digestible tabular formats," reminds Kraft. "General-purpose technologies like Hadoop and Spark can work wonders with geo data expressed that way. We did this for Global Forest Watch's FORMA algorithm using a standardized grid, and used Hadoop to burn through it all. It wasn’t necessarily computationally efficient, but it scaled like crazy, and we didn’t have to think much about standard GIS operations. It was very freeing!"
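
The "rasters are just arrays of numbers" point can be shown directly in three steps:

* Flatten each pixel into an (x, y, value) row.
* Key each row by a cell of a standardized grid.
* Aggregate by key.

This is the same map-then-reduce pattern that Hadoop and Spark distribute across a cluster. The single-process Python sketch below assumes a north-up raster described by a GDAL-style six-element geotransform. The grid scheme and function names are hypothetical and do not reflect FORMA's actual grid.

```python
import math
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple

Raster = List[List[float]]
# GDAL-style geotransform: (x_origin, pixel_width, 0, y_origin, 0, pixel_height).
# pixel_height is negative for north-up rasters; rotation terms are assumed to be 0.
GeoTransform = Tuple[float, float, float, float, float, float]
CellKey = Tuple[int, int]


def raster_to_rows(raster: Raster, gt: GeoTransform) -> Iterator[Tuple[float, float, float]]:
    """Flatten a raster into tabular (x, y, value) rows at pixel centers."""
    x0, dx, _, y0, _, dy = gt
    for r, row in enumerate(raster):
        for c, value in enumerate(row):
            yield x0 + (c + 0.5) * dx, y0 + (r + 0.5) * dy, value


def map_to_cells(rows: Iterable[Tuple[float, float, float]],
                 cell_size: float) -> Iterator[Tuple[CellKey, float]]:
    """'Map' step: emit (grid-cell key, value) pairs for a fixed grid."""
    for x, y, value in rows:
        yield (math.floor(x / cell_size), math.floor(y / cell_size)), value


def reduce_mean(pairs: Iterable[Tuple[CellKey, float]]) -> Dict[CellKey, float]:
    """'Reduce' step: average all values that share a key."""
    sums: Dict[CellKey, float] = defaultdict(float)
    counts: Dict[CellKey, int] = defaultdict(int)
    for key, value in pairs:
        sums[key] += value
        counts[key] += 1
    return {key: sums[key] / counts[key] for key in sums}


if __name__ == "__main__":
    raster = [[1.0, 2.0], [3.0, 4.0]]
    gt = (0.0, 1.0, 0.0, 2.0, 0.0, -1.0)  # 1-unit pixels, top-left corner at (0, 2)
    print(reduce_mean(map_to_cells(raster_to_rows(raster, gt), cell_size=2.0)))
    # {(0, 0): 2.5}
```

On a cluster, the framework would shuffle the emitted pairs so that each reducer receives all values for its keys. The per-record logic itself would not need to change.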

Hadoop and Spark are open-source frameworks for processing Big Data in parallel on large server clusters (Hadoop also provides a distributed file system for storage), hiding much of the complexity of parallel computing. Advances in deep learning from the gaming and computer-vision fields have benefited automated image-processing methods, although deep-learning workflows can be time-consuming because of the pre-processing, training and georectification they require.

Aligning pixels from disparate sources is being tackled by the Committee on Earth Observation Satellites and USGS, which have been building an open-source data cube modeled on one developed by Geoscience Australia and the Australian Space Agency.
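
Putting every scene onto one shared grid is what makes pixels from different sources line up. The following minimal sketch shows that step using nearest-neighbor resampling. It assumes both rasters already share a coordinate reference system and use north-up, GDAL-style geotransforms. Real data cubes also reproject between coordinate systems and offer other resampling methods. The function name is illustrative.

```python
import math
from typing import List, Optional, Tuple

Raster = List[List[Optional[float]]]
# GDAL-style geotransform: (x_origin, pixel_width, 0, y_origin, 0, pixel_height).
GeoTransform = Tuple[float, float, float, float, float, float]


def resample_nearest(src: Raster, src_gt: GeoTransform,
                     dst_gt: GeoTransform, dst_shape: Tuple[int, int],
                     nodata: Optional[float] = None) -> Raster:
    """Resample `src` onto the target grid by taking, for each target pixel,
    the source pixel that contains the target pixel's center."""
    sx0, sdx, _, sy0, _, sdy = src_gt
    tx0, tdx, _, ty0, _, tdy = dst_gt
    rows, cols = dst_shape
    out: Raster = []
    for r in range(rows):
        y = ty0 + (r + 0.5) * tdy                # target pixel center (y)
        src_r = math.floor((y - sy0) / sdy)      # source row containing it
        out_row: List[Optional[float]] = []
        for c in range(cols):
            x = tx0 + (c + 0.5) * tdx            # target pixel center (x)
            src_c = math.floor((x - sx0) / sdx)  # source column containing it
            inside = 0 <= src_r < len(src) and 0 <= src_c < len(src[0])
            out_row.append(src[src_r][src_c] if inside else nodata)
        out.append(out_row)
    return out


if __name__ == "__main__":
    coarse = [[1.0, 2.0], [3.0, 4.0]]                  # 10-unit pixels
    coarse_gt = (0.0, 10.0, 0.0, 20.0, 0.0, -10.0)
    fine_gt = (0.0, 5.0, 0.0, 20.0, 0.0, -5.0)         # shared 5-unit grid
    for row in resample_nearest(coarse, coarse_gt, fine_gt, (4, 4)):
        print(row)
```

Target pixels that fall outside the source extent receive `nodata`, so later per-pixel analysis can skip them rather than mix in invalid values.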

Big Data, Big Problems?

Scientists establish accuracy and credibility by designing empirical studies and having research papers peer reviewed. In the private sector, defining accuracy and credibility is critical to the success of any profitable business enterprise.

"Big Data does not excuse or change the requirements for rigorous quality control," says Kass Green, president of Kass Green & Associates. Independent variables still need to be thoroughly reviewed for completeness, bias and accuracy.

However, given the volume of information processed, one must establish rules as to where generalizations can be made vs. where precision is required.

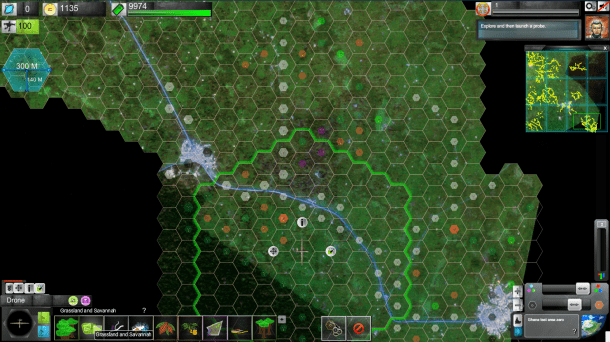

In Cerberus: Forest Falcon, players crowdsourced through Facebook create a baseline map from Sentinel-2 data, with the goal of preserving rain forests and helping local economies. (Credit: BlackShore & ESA)

"Get creative about shortcuts," adds Kraft. "Think about where you really need accuracy, and where you can simplify and speed up an operation at reasonable cost to the quality of output."
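
One common shortcut of this kind is to aggregate imagery to a coarser resolution before analysis. A factor of 2 cuts the pixel count by 4, and a factor of 10 cuts it by 100. The plain-Python sketch below uses illustrative names. It also measures what the shortcut costs by expanding the coarse result back to full size and comparing it with the original. It assumes continuous values such as reflectance; averaging would be wrong for categorical rasters like land-cover classes.

```python
from typing import List

Raster = List[List[float]]


def downsample_mean(raster: Raster, factor: int) -> Raster:
    """Average each factor x factor block into one pixel (edge blocks may be partial)."""
    rows, cols = len(raster), len(raster[0])
    out: Raster = []
    for r0 in range(0, rows, factor):
        out_row = []
        for c0 in range(0, cols, factor):
            block = [raster[r][c]
                     for r in range(r0, min(r0 + factor, rows))
                     for c in range(c0, min(c0 + factor, cols))]
            out_row.append(sum(block) / len(block))
        out.append(out_row)
    return out


def upsample_repeat(coarse: Raster, factor: int, rows: int, cols: int) -> Raster:
    """Expand a coarse raster back to rows x cols by repeating each pixel."""
    return [[coarse[r // factor][c // factor] for c in range(cols)] for r in range(rows)]


def mean_abs_error(a: Raster, b: Raster) -> float:
    """Average absolute per-pixel difference between two same-shaped rasters."""
    diffs = [abs(x - y) for row_a, row_b in zip(a, b) for x, y in zip(row_a, row_b)]
    return sum(diffs) / len(diffs)


if __name__ == "__main__":
    fine = [[float(r * 4 + c) for c in range(4)] for r in range(4)]
    coarse = downsample_mean(fine, 2)
    print(coarse)  # [[2.5, 4.5], [10.5, 12.5]]
    print(mean_abs_error(fine, upsample_repeat(coarse, 2, 4, 4)))  # 2.0
```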

When delivering results, metadata can track exactly how the information was produced, from source to solution. "There will need to be standards-based services to map one metadata set to another," says Kevin Howald, geospatial collaboration manager at Harris.
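
At its simplest, mapping one metadata set to another means two things: a crosswalk from one schema's field names to another's, and a record of each processing step applied. The sketch below is hypothetical throughout. The field names are placeholders, not any published metadata standard. A standards-based service would also handle type conversion, controlled vocabularies and validation.

```python
from typing import Any, Dict

# Hypothetical crosswalk: provider field name -> internal field name.
CROSSWALK: Dict[str, str] = {
    "acq_date": "acquisition_date",
    "cloud_pct": "cloud_cover_percent",
    "sensor_id": "sensor",
    "proc_level": "processing_level",
}


def map_metadata(record: Dict[str, Any], crosswalk: Dict[str, str]) -> Dict[str, Any]:
    """Rename known fields; keep unknown ones under 'unmapped' so nothing is silently dropped."""
    mapped: Dict[str, Any] = {}
    unmapped: Dict[str, Any] = {}
    for key, value in record.items():
        if key in crosswalk:
            mapped[crosswalk[key]] = value
        else:
            unmapped[key] = value
    if unmapped:
        mapped["unmapped"] = unmapped
    return mapped


def with_lineage(record: Dict[str, Any], step: str) -> Dict[str, Any]:
    """Return a copy of `record` with `step` appended to its processing history."""
    updated = dict(record)
    updated["lineage"] = list(record.get("lineage", [])) + [step]
    return updated


if __name__ == "__main__":
    source = {"acq_date": "2016-07-01", "cloud_pct": 12, "vendor_note": "sample"}
    print(with_lineage(map_metadata(source, CROSSWALK), "mapped with CROSSWALK"))
```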

Applications

The most exciting piece of this technology puzzle remains the real-world applications. Using medium-resolution Earth-observation Big Data, researchers are sharing information on climate-change indicators and making predictions that impact policy and the lives of ordinary people worldwide.

"Use of the entire archive of [Landsat’s more than 6 million scenes at the USGS EROS Center] allows the extraction of temporal signals, such as land-cover change or ecosystem health, that were not previously discernible," notes Gary Geller, senior expert for biodiversity and ecosystems, Group on Earth Observations.
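
One simple way to pull a temporal signal out of a long archive is to fit a straight line through each pixel's values over time and map the slope. Here is a minimal sketch, assuming the yearly layers are already co-registered on the same grid and that cloudy or missing observations are marked `None`. Operational change-detection methods are considerably more robust than an ordinary least-squares fit. The function names and sample values are illustrative.

```python
from typing import List, Optional, Sequence

Layer = List[List[Optional[float]]]


def ols_slope(times: Sequence[float], values: Sequence[Optional[float]]) -> Optional[float]:
    """Least-squares slope of values vs. times, skipping missing (None) observations."""
    pairs = [(t, v) for t, v in zip(times, values) if v is not None]
    if len(pairs) < 2:
        return None
    mean_t = sum(t for t, _ in pairs) / len(pairs)
    mean_v = sum(v for _, v in pairs) / len(pairs)
    num = sum((t - mean_t) * (v - mean_v) for t, v in pairs)
    den = sum((t - mean_t) ** 2 for t, _ in pairs)
    return num / den if den else None


def trend_map(stack: List[Layer], years: Sequence[float]) -> Layer:
    """stack[i] is the layer observed in years[i]; returns per-pixel change per year."""
    rows, cols = len(stack[0]), len(stack[0][0])
    return [[ols_slope(years, [layer[r][c] for layer in stack]) for c in range(cols)]
            for r in range(rows)]


if __name__ == "__main__":
    years = [2000, 2005, 2010, 2015]
    # Hypothetical 1x3 values: greening, stable, and declining with one cloudy year.
    stack = [[[0.40, 0.50, 0.70]],
             [[0.45, 0.50, None]],
             [[0.50, 0.50, 0.60]],
             [[0.55, 0.50, 0.55]]]
    print(trend_map(stack, years))
```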

Esri's Change Matters (changematters.esri.com/compare) uses global, multi-year Landsat imagery to show vegetation change. This type of simple, yet highly visual application often serves to ignite the imagination of problem solvers outside the industry.
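
One widely used way to quantify vegetation change from Landsat is to compute the Normalized Difference Vegetation Index, NDVI = (NIR - Red) / (NIR + Red), for two dates and take the difference. For Landsat 8's OLI sensor, red is band 4 and near-infrared is band 5. This is a generic sketch, not a description of how Change Matters itself computes change. It assumes the inputs are co-registered surface-reflectance values, and the function names and sample values are illustrative.

```python
from typing import List, Optional

Raster = List[List[float]]


def ndvi(nir: float, red: float) -> Optional[float]:
    """NDVI = (NIR - Red) / (NIR + Red); None where the denominator is zero."""
    total = nir + red
    return None if total == 0 else (nir - red) / total


def ndvi_change(nir_before: Raster, red_before: Raster,
                nir_after: Raster, red_after: Raster) -> List[List[Optional[float]]]:
    """Per-pixel NDVI(after) - NDVI(before); negative values suggest vegetation loss."""
    change = []
    for rows in zip(nir_before, red_before, nir_after, red_after):
        out_row = []
        for nb, rb, na, ra in zip(*rows):
            before, after = ndvi(nb, rb), ndvi(na, ra)
            out_row.append(None if before is None or after is None else after - before)
        change.append(out_row)
    return change


if __name__ == "__main__":
    # Hypothetical reflectances for two pixels: one cleared, one unchanged.
    print(ndvi_change([[0.50, 0.40]], [[0.10, 0.05]],
                      [[0.30, 0.40]], [[0.10, 0.05]]))
```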

High-resolution Earth-observation imagery also is being marketed and sold to financial-services and insurance companies to track consumer spending and assist with claims processing. Real-time image processing onboard drones helps soldiers on the ground survey the surrounding territory before reallocating resources. To fuel market competition, greater effort must go into providing image-quality and explanatory information to non-experts.

"The real profits will be made by firms who concentrate on solving clients' problems instead of pushing out technologies that may not be needed," says Green.

Selling valuable products to paying customers, some of whom know Earth-observation imagery only from Google Earth, requires additional levels of effort.

"A ton of improvements need to be made in developing actionable analytics for decision makers who are most often executives in industries that aren’t used to using geospatial data as an input," notes Diamond.

Cloud architectures, open-source software, creative image-processing developers and a marketplace eager to integrate Earth-observation and location-based datasets to verify assumptions and predict trends continue to propel the industry forward. The ever-present collection of geospatial and Earth-observation data derived from people, places and things as they change (or not) will help us understand our past, present and future in relation to the planet and each other.

Catherine Burton is a senior business development professional at Southland Spatial.