Efficiently managing and exploiting the growing volume of video data is becoming easier thanks to open-source and commercial software advances.

By Mark Kozak and Ed Bohling, PAR Government Systems (www.pargovernment.com), Rome, N.Y.

Ever since German engineers employed a form of closed-circuit television to monitor V-2 rocket tests in 1942, streaming video has been used to collect actionable information. During the next 70 years, advances in sensor technology, miniaturization, mass production and data compression have made video streaming ubiquitous and cost effective. Developers of analysis applications have continued to push the envelope of what's possible.

Analyzing streaming full-motion video (FMV) now is a well-established way to extract information for many geospatial-based applications and disciplines. But as geospatial professionals sift through volumes of video, along with derived imagery and metadata, they're forced to ask themselves which tools are the best for the job.

Although it's unlikely a city government will buy a multimillion-dollar unmanned aircraft system (UAS), other repurposing already has begun. In a press release dated Aug. 9, 2012, Texas Rep. Henry Cuellar announced that U.S. Customs and Border Protection analysts are evaluating how to repurpose surplus aerostats, which provide persistent, wide-area border surveillance.

Even on a much smaller scale, streaming video collection capabilities continue to be deployed. Consider all the traffic and Web cams, even in small cities. Unfortunately, the affordability and relative ease of installation are outpacing the capacity to efficiently manage and exploit the growing volume of data. This gap affects analysis by large and small government organizations alike, and it prevents users from extracting even the most relevant information collected from their video sources.

A Key Ingredient: Metadata

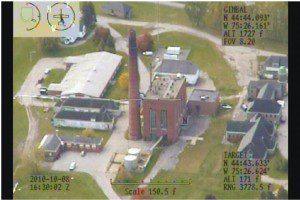

Being able to watch a scene in real time is just the beginning; today's streaming video contains a wealth of metadata describing each scene. Metadata are limited only by imagination. Examples of metadata currently collected include sensor elevation, geographic bounding coordinates, camera angle, sensor type and camera focal length. Such metadata are synchronized with each video frame for easy correlation, and they can be updated for each frame, or every few seconds, as appropriate for the video's purpose.

The metadata included with each FMV frame typically are presented as Key Length Value (KLV) data. KLV, the encoding standard approved by the Society of Motion Picture and Television Engineers, has been adopted by the National Geospatial-Intelligence Agency's Motion Imagery Standards Board (MISB), which has published numerous KLV dictionaries that represent a rich set of geospatial metadata to be associated with video frames. Many of these dictionaries are available to the public to facilitate interoperability.

Although there are several ways to include metadata, the use of KLV triplets is one of the more effective and extensible approaches. Using KLV presupposes that all parties producing and using streaming video agree on a specification or dictionary. The specification describes the metadata stream's content, starting with the number of bytes used as the key (1, 2, 4 or 16). The number of bytes for length follows the key. In addition, the dictionary must include a list of expected keys and a name, or description, of each.
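The triplet layout described above can be illustrated with a minimal Python sketch. It is not a MISB-compliant decoder. It assumes a fixed key size agreed on in advance and a BER-encoded length field, which is one of the length encodings commonly used with KLV. The dictionary entries, key numbers and the names `EXAMPLE_DICTIONARY`, `read_ber_length` and `parse_klv` are hypothetical and exist only for illustration.

```python
from typing import Dict, Iterator, Tuple

# Hypothetical dictionary for illustration only; real key assignments come
# from the specification the producer and consumer have agreed on.
EXAMPLE_DICTIONARY: Dict[int, str] = {
    1: "sensor_elevation",
    2: "camera_angle",
    3: "focal_length",
}


def read_ber_length(buf: bytes, pos: int) -> Tuple[int, int]:
    """Decode a BER length starting at buf[pos]; return (length, next_pos)."""
    if pos >= len(buf):
        raise ValueError("truncated length field")
    first = buf[pos]
    if first < 0x80:
        # Short form: the byte itself is the length (0 to 127).
        return first, pos + 1
    # Long form: the low 7 bits give the number of length bytes that follow.
    count = first & 0x7F
    end = pos + 1 + count
    if count == 0 or end > len(buf):
        raise ValueError("unsupported or truncated BER length")
    return int.from_bytes(buf[pos + 1:end], "big"), end


def parse_klv(buf: bytes, key_size: int = 1) -> Iterator[Tuple[int, bytes]]:
    """Yield (key, value) pairs from a buffer of back-to-back KLV triplets."""
    if key_size not in (1, 2, 4, 16):
        raise ValueError("key size must be 1, 2, 4 or 16 bytes")
    pos = 0
    while pos < len(buf):
        if pos + key_size > len(buf):
            raise ValueError("truncated key")
        key = int.from_bytes(buf[pos:pos + key_size], "big")
        pos += key_size
        length, pos = read_ber_length(buf, pos)
        if pos + length > len(buf):
            raise ValueError("truncated value")
        yield key, buf[pos:pos + length]
        pos += length


# Two made-up triplets: key 1 with a 2-byte value, key 2 with a 1-byte value.
sample = bytes([1, 2, 0x01, 0xF4, 2, 1, 0x2D])
for key, value in parse_klv(sample):
    print(EXAMPLE_DICTIONARY.get(key, "unknown"), value.hex())
```

Keys missing from the dictionary are still yielded rather than rejected, because the length field lets a reader skip values it does not understand. That property is a large part of what makes the approach extensible.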

MISB recommends the use of the MPEG-2 transport stream as the container to multiplex KLV with the actual video stream. A few widely available software applications can play MPEG-2 transport streams.
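To make the idea of multiplexing concrete, the following Python sketch counts packets per packet identifier (PID) in a transport stream buffer. It relies only on two well-known properties of the format: packets are 188 bytes long and begin with the sync byte 0x47, and each packet header carries a 13-bit PID that tells a demultiplexer which elementary stream the packet belongs to. The PID values 0x100 and 0x101 and the helper `make_packet` are hypothetical; in a real stream the PIDs for video and metadata are announced in the stream's program tables, which this sketch does not parse.

```python
from collections import Counter

TS_PACKET_SIZE = 188  # fixed MPEG-2 transport stream packet size in bytes
SYNC_BYTE = 0x47      # every transport packet starts with this byte


def packet_pid(packet: bytes) -> int:
    """Return the 13-bit PID from one aligned transport stream packet."""
    if len(packet) != TS_PACKET_SIZE or packet[0] != SYNC_BYTE:
        raise ValueError("not an aligned transport stream packet")
    # PID = low 5 bits of header byte 1 followed by all 8 bits of byte 2.
    return ((packet[1] & 0x1F) << 8) | packet[2]


def count_pids(data: bytes) -> Counter:
    """Count whole packets per PID in a buffer of aligned packets."""
    counts: Counter = Counter()
    for start in range(0, len(data) - TS_PACKET_SIZE + 1, TS_PACKET_SIZE):
        counts[packet_pid(data[start:start + TS_PACKET_SIZE])] += 1
    return counts


def make_packet(pid: int) -> bytes:
    """Hypothetical helper: build an empty payload-only packet for a demo."""
    header = bytes([SYNC_BYTE, (pid >> 8) & 0x1F, pid & 0xFF, 0x10])
    return header + bytes(TS_PACKET_SIZE - len(header))


# Synthetic stream: three packets on one PID interleaved with one on another.
demo = make_packet(0x100) * 2 + make_packet(0x101) + make_packet(0x100)
for pid, n in sorted(count_pids(demo).items()):
    print(hex(pid), n)
```

A player that ignores the metadata PID can still show the video, which is consistent with a general-purpose player displaying FMV without decoding its KLV.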

One open-source application that has gained much popularity is VideoLAN Client (VLC), which plays streaming video and has many interesting features, including gamma and brightness adjustments. Unlike products such as PAR Government Systems' GV 3.0, however, VLC doesn't decode KLV.

Addressing Licensing Concerns

A fact to consider when using any open-source, commercial or professional tool is that MPEG technologies are patented, which means patent royalties must be paid to the MPEG-LA consortium for their use.

Many FMV visualization software applications use an open-source software (OSS) package named FFmpeg, which provides reliable coding and decoding (codec) libraries. The FFmpeg libraries typically are used to develop custom software applications that process FMV with KLV. FFmpeg use still requires users to account for MPEG-LA royalty payments and licenses.

FFmpeg is available under either the GNU General Public License (GPL) or Lesser GPL (LGPL) licensing model, depending on how the software is compiled. Using the GPL option, the software application can encode videos using the advanced H.264 compression, which supports greater bits-per-pixel and enables other powerful features.

Doing so, however, brings along the copyleft requirement, which means any software application linking to a specific FFmpeg build must be made available in source form under the same license. The LGPL option eliminates this requirement to make your value-added software open source, but it prevents the use of the H.264 encoder and other advanced features.

Assessing Additional OSS Issues

Even after licensing concerns are addressed, users still may encounter OSS issues. Generally, the OSS community does an excellent job reviewing contributions and maintaining the baseline; however, many user organizations require developers to provide a full warranty on all OSS components used.

One aspect of this requirement is full compliance with Security Technical Implementation Guides (STIGs) published by the U.S. Defense Information Systems Agency. The requirement's goal is to minimize security risks and vulnerabilities. Complying with the applicable STIG isn't an easy task. Packages such as FFmpeg are many tens of thousands of lines of complex code. Reviewing, testing and fixing vulnerabilities can be costly.

If you're going to develop your own video solution using FFmpeg, and you need to deliver a stable and compliant product, be prepared to spend a lot of time reviewing code, testing, documenting testing methodology and results, and completing licensing paperwork.

This isn't to say that FFmpeg or other OSS shouldn't be used. OSS is a great resource and can save considerable time and money, but ready-to-use alternatives, such as the GV 3.0 product line, already comply with these licensing and accreditation requirements.

Although many of the FMV functions described here aren't complex to implement, they simply aren't present in today's commonly used video visualization applications. When video crosses the threshold from entertainment to information extraction, specialized software is essential for optimally exploiting and managing that information.

FMV in the Future

As FMV collection sources continue to grow exponentially, so will the need for robust data management, processing, archiving, retrieval and visualization. Adhering to existing and new metadata standards and developing secure applications are critical to the sustained, effective use of video data by analysts attempting to extract actionable information.

Pixel Data Provide a Wealth of Information

Video pixels reveal more information than expected if the right tools are applied, such as the suspicious-looking black backpack just behind the truck's tire in the image at right.

Today's streaming video can contain much more information than meets the eye. Digital video comprises pixels, much like still imagery taken with a digital camera. With ever-increasing camera sensitivity, video pixels reveal more information than expected if the right tools are applied.

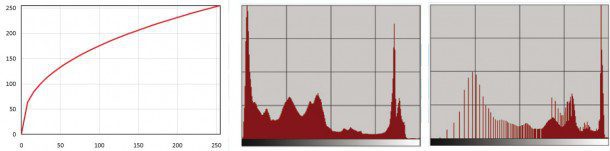

A logarithmic function (left) was applied to the frame of the van above. Histograms of the original (middle) and manipulated frame (right) show how the pixels were spread out to lighten the dark areas without losing too much data in the lighter regions.

The photos above show an example video frame as viewed from a typical surveillance camera at left and the same video frame with a normalized logarithmic histogram expansion applied at right. The expansion makes the suspicious-looking black backpack clearly visible, just behind the truck's tire.

This is possible because even though human vision may not see the details, the information is present in the pixels. In this example, an 8-bit monochrome video, the pixel values can range from 0 to 255, representing black through full white. The pixel value range of the backpack and nearby shadow is 2 to 9.

There isn't enough difference from one pixel to the next for the eye to detect a change. Passing the pixels through a logarithmic function represented in the figure above left increases the value differences in the dark areas without losing too much data in the lighter regions. The figures above middle and right present histograms showing how the lower pixel values were spread apart by the function.
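A normalized logarithmic expansion of this kind can be sketched in a few lines of Python. The article does not give the exact function used, so the formula here, `out = 255 * log(1 + v) / log(256)`, is an assumption chosen because it maps 0 to 0 and 255 to 255. The names `log_lut`, `apply_lut` and `histogram` are illustrative.

```python
import math
from typing import List, Sequence


def log_lut(max_value: int = 255) -> List[int]:
    """Build a lookup table for a normalized logarithmic stretch.

    Assumed formula: out = max_value * log(1 + v) / log(1 + max_value).
    """
    scale = max_value / math.log(1 + max_value)
    return [round(scale * math.log(1 + v)) for v in range(max_value + 1)]


def apply_lut(pixels: Sequence[int], lut: Sequence[int]) -> List[int]:
    """Map every pixel value through the lookup table."""
    return [lut[p] for p in pixels]


def histogram(pixels: Sequence[int], levels: int = 256) -> List[int]:
    """Count how many pixels fall on each gray level."""
    counts = [0] * levels
    for p in pixels:
        counts[p] += 1
    return counts


lut = log_lut()
dark_region = [2, 3, 5, 9]  # made-up values in the 2-to-9 shadow range
stretched = apply_lut(dark_region, lut)
print(stretched)
print(sum(1 for c in histogram(stretched) if c))  # gray levels in use
```

With this particular formula, the 2-to-9 range lands at roughly 51 to 106, so neighboring dark values that differed by one or two gray levels end up many levels apart. Because the curve is monotonic and still ends at 255, bright pixels are compressed rather than clipped.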

This result isn't quite the same as a simple brightness and contrast adjustment. Adjusting brightness and contrast would simply produce a more washed-out image and would still fail to reveal the desired detail. Similarly, an exponential function can be used to bring out details in the video's bright regions. Even more detail can be extracted if a camera or sensor with a bit depth of more than 8 bits per pixel is used.
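The contrast between a linear adjustment and an exponential curve can be sketched as follows. Both formulas are assumptions made for illustration, not the article's method: the linear adjustment is modeled as `gain * v + offset` clipped to the 8-bit range, and the exponential curve as `256 ** (v / 255) - 1`, which also maps 0 to 0 and 255 to 255. The names `linear_lut` and `exp_lut` are hypothetical.

```python
from typing import List


def linear_lut(gain: float, offset: float = 0.0, max_value: int = 255) -> List[int]:
    """Brightness/contrast modeled as gain * v + offset, clipped to range."""
    return [
        min(max_value, max(0, round(gain * v + offset)))
        for v in range(max_value + 1)
    ]


def exp_lut(max_value: int = 255) -> List[int]:
    """Exponential stretch: out = (1 + max_value) ** (v / max_value) - 1.

    It spreads bright values apart and compresses dark ones.
    """
    return [
        round((1 + max_value) ** (v / max_value) - 1)
        for v in range(max_value + 1)
    ]


# A linear gain of 8 separates dark values but saturates everything from
# gray level 32 upward, which is what makes the image look washed out.
lin = linear_lut(gain=8.0)
print(lin[2], lin[9], lin[32], lin[200])

# The exponential curve widens the gap between two bright values.
exp = exp_lut()
print(exp[246], exp[255])
```

The design point is that clipping destroys information, while a monotonic nonlinear curve only redistributes it. The linear table above sends every input from 32 upward to the same output, whereas the exponential table keeps the full input range within 0 to 255.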